Fuzzing binaries with LLVM's libFuzzer and rev.ng

Technical

In this blogpost, we show how libFuzzer, the LLVM fuzz testing library part, can be employed with rev.ng in order to perform coverage-guided blackbox fuzzing of executable binaries. We also show that our approach is fast, semantic-preserving and simply requires to implement the harness function, as occurs for programs with source code available.

Note that this work is based on the rev.ng lifter, the open-source component at the core of the decompiler we're building. The publicly available version is a bit outdated, but we are in the process of pushing out new versions on a more regular basis. Stay tuned.

Background

Fuzzing is a widespread technique for discovering software security vulnerabilities, where randomly mutated inputs are fed to a target program. It underwent a breakthrough around 2014, with the introduction of AFL and it has been gaining popularity steadly ever since. Its success is in large part due to its effectiveness in identifying real world security vulnerabilities. What makes it innovative is its coverage-based fuzzing approach, which prioritizes inputs that lead to traverse previously unexplored execution paths. This coverage information is used to drive the fuzzing strategy, and the greater the coverage, the higher the chances of finding bugs. To collect coverage information, the input program is instrumented at compile-time. Then, at run-time, new inputs are continuously generated through a feedback-mechanism.

Other state-of-the-art coverage-guided fuzz testing frameworks include libFuzzer and Honggfuzz.

While whitebox (source-available) fuzzing is a relatively consolidated research area, assessing the security of closed-source software is still largely an open challenge. Existing works primarily resort to dynamic binary translation which injects the instrumentation dynamically. Main examples comprise AFL QEMU user-mode and Honggfuzz combined with QBDI. This process is straightforward, but comes with a significant runtime overhead and, consequently, a reduced effectiveness.

Additionally, running a whole program can be sometimes very hard - if feasible at all. For instance, booting a firmware image is not always manageable. In such situations, fuzzing only delimited portions of code might be a winning strategy.

Our approach

rev.ng features a static binary translator, that, given a Linux program compiled for architecture A (e.g., AArch64) can produce an equivalent program for another architecture B (e.g., x86-64), or even for the same architecture A. The input binary code is converted into a higher-level intermediate representation, LLVM IR, which makes it suitable for performing a wide range of analyses and transformations. We have been able to translate and properly execute programs of significant size and complexity such as perl and gcc.

Now, the intuition is that we can leverage the static binary translator to inject the necessary instrumentation to perform coverage-guided fuzzing. This approach is purely ahead-of-time, since both the translation happens and the instrumentation take place offline. This results in significantly reducing the run-time overhead.

More specifically, libFuzzer - which is usually employed in presence of the source code - can be combined with the recompilable LLVM IR module produced by rev.ng. The output is a standalone binary that keeps feeding new inputs to the translated program.

When fuzzing less, the terminal pager, we obtained the following results:

Execs per second | Total execs | |||

|---|---|---|---|---|

1min | 10min | 60min | 60min | |

afl | 3 582 | 3 495 | 3 682 | 13 187 952 |

rev.ng | 15 0617 | 79 701 | 78 306 | 271 217 728 |

Compared with AFL in QEMU-mode, we got speedups ranging from 20× to 40×. Check out our previous paper for a more detailed rundown on performance.

As a result, our approach supports in-memory coverage-guided fuzzing of individual functions even when the source code is not available. Since, by design, one can fuzz, for instance, an ARM binary after translating it to x86-64, it is architecture-independent as well. Moreover we can test distinct portions of code separately, without executing the whole program. This is particularly beneficial, for instance, for self-contained libraries and embedded firmwares.

Here is an overview of the fuzzing process. At the last stage of our optimization passes, the fuzzing framework produces, among other things, a C header declaring all the collected functions for the user. After creating the harness function, the compiler driver is called to build the fuzzer binary.

The fuzzing process

Briefly, our fuzzing framework aims to:

- preserve functional correctness and achieve good performance;

- enable fuzzing of individual functions;

- enable hooking of functions;

- reuse existing sanitizers.

At this stage, these goals are achieved by implementing three LLVM passes that run over the recompilable unit of LLVM IR: an instrumentation pass (PatchReturn), a pass that generates helper stubs and C headers for every lifted function (WrapperGen), and a pass for function hooking when needed (FuncHook).

Functional correctness and performance

Two major challenges typical in static binary translation are preserving both semantics correctness and performance of the original program. To have both, we adopt a two-fold approach, which consists in:

- Collecting all the basic blocks of the original program in a single LLVM function (known as

root). While not extremely fast and prone to less optimizations, this guarantees functional correctness. - Identifying the start and end addresses of every function and isolating their code into distinct LLVM functions (so called isolated functions). This allows for more aggressive optimizations, but may be subject to inaccuracies in the CFG-recovering phase.

The root function is intended to start the execution and migrate it to the appropriate isolated function, where it will stay for most of the time.

A caveat though: since function boundaries detection and data code separation boil down to be an undecidable problem, we need a way to continue flawlessly with the execution if, for instance, an unexpected jump target is met in an isolated function.

Without such a mechanism, semantic correctness would not be guaranteed. To address this issue, we let the code run at full speed while inside an isolated function, and fall back to root only if an unforeseen jump, e.g., a misidentified return instruction, is encountered.

As a comparison, see how the root function and the isolated main (known as revng translated block) respectively look like in the LLVM IR:

As shown in the snippet, the dispatcher inside root is responsible for redirecting the execution to the respective isolated function as appropriate.

Fuzzing of individual functions

All the isolated functions found are possible candidates for fuzzing. Now, we need a way to let the translated program run so that all the initialization routines (e.g., the memory allocator) can be carried out, and pause the execution of the guest (the translated program) when the user-defined entrypoint is met (be it main or whenever we need to back out of the program).

This is what the first instrumentation pass is meant to do, i.e., to allow to return from any isolated function to root. The migration is necessary to give control back to LLVMFuzzerTestOneInput, the function in charge of guiding the fuzzing process.

As an example, suppose we need to initialize the program and return to the fuzzing context only once a startup routine (init_vars) has been executed. The transition back would be the following one:

The snippet below shows how we insert a sentinel variable to make such a transition before returning from init_vars.

As can be seen, the terminator block rtb_init_vars.0x1d, which is supposed to return to main, is no longer reachable - instead, we return to root, which ultimately returns to LLVMFuzzerTestOneInput.

The second pass, on the other hand, generates a wrapper around each isolated function in the form of a C header (generated_wrappers.h), thus allowing normal function invocation without inspecting the ABI used by the original program.

Note, though, that we cannot invoke the isolated function directly: we still need to pass through root in order to maintain the functional correctness.

So, for every isolated function, whose prototype is retrieved through dedicated analyses, we set up the emulated program counter (which points to the respective isolated function), the emulated stack pointer, and the function arguments. If the function is supposed to return, a struct with the possible arguments to be returned is filled.

Hooking functions

The third pass allows the user to easily replace existing functions (like malloc, free, send, recv and so forth). This turns out to be useful when we want to add user-defined callbacks, logging or inject raw data, e.g., when a function expects data coming from a socket.

For every isolated function, we take the following steps to perform function hooking:

- make its symbol have weak linkage;

- clone it;

- wrap up the original implementation in a dedicated function.

This allows us to bypass the One Definition Rule. Hence, if a symbol is redefined, it will override the original one, but we are still able to call the original function. We can hook a function by adding its redefinition in a support file, which is linked with the IR module, as shown below.

Sanitizers

We were also able to successfully combine fuzzing with sanitization by utilizing two passes that LLVM ships with. This increases the effectiveness of the testing process considerably.

The first one is SanitizerCoverage (SanCov), which monitors the paths that are being exercized by inserting callbacks that implement coverage tracking at different levels (e.g., block coverage and edge coverage).

One of the most interesting sanitizer for fuzzing, is AddressSanitizer (ASan), which provides a sound way to detect memory corruptions. It works by surrounding dynamic memory allocation with red zones, extra unaddressable memory, and instrumenting every load/store operation to check for out-of-bounds accesses. Temporal bugs, like use-after-free issues, are checked too by restricting the use of freed allocations, so that these regions cannot be immediately reclaimed. Note that stack-based objects are not poisoned, since it is both non-trivial to achieve and a return address overwrite would be likely caught anyways.

Below is how the support file resembles when we manually replace default malloc/free with the ASan-instrumented ones:

Unlike the hooking example, this time, since we are not returning to a LLVM function, we need to pop the emulated program counter off the stack in order to return to the caller - while continuing to return the value. This is embedded in the EMULATED_RET macro.

Execution flow

libFuzzer's execution begins with the fuzzing target LLVMFuzzerTestOneInput, which is repeatedly called with the mutated input buffer.

Within this function, an initialization routine (InitializeFuzzing) is called the very first time, which reserves a portion of memory that is meant to emulate the stack of the original program. It also initializes some other components, including the heap page and the SIGSEGV handler.

Then, root starts the program.

Here is a simplified version of InitializeFuzzing:

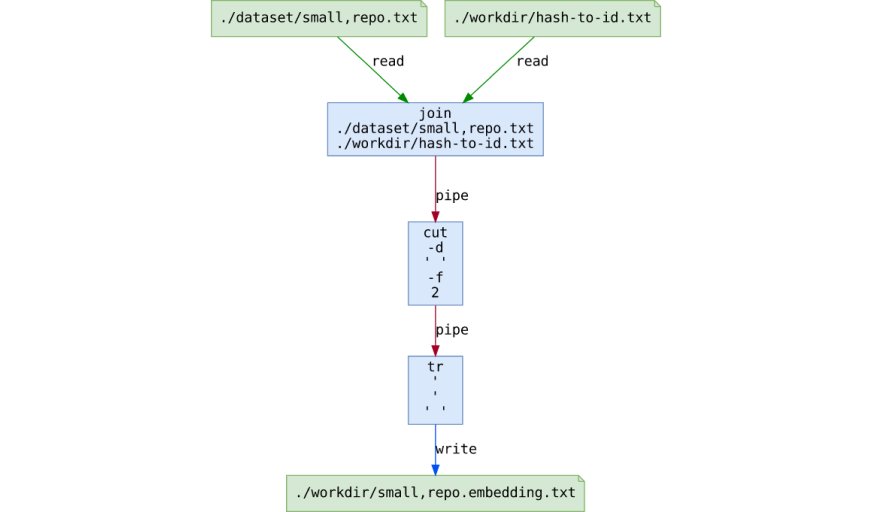

Compilation stage

During the translation process, the LLVM optimizer runs all our transformation passes on the lifted IR. The support file containing LLVMFuzzerTestOneInput and the redefinition of the functions to be hooked is compiled to LLVM IR as well and linked to the translated code to form a single LLVM bitcode file, which is going to be lowered back to object code.

For better fuzzing performance, llc, the LLVM static compiler, is called with optimization level -O2.

Eventually, the full LLVM optimization pipeline looks as follows:

Fuzzing the PCRE library

As a case study, we tried to reproduce a stack-based buffer overflow in the Perl Compatible Regular Expressions library (CVE-2015-3217). For our analysis, we built a flawed version of less as a static executable for GNU/Linux on x86-64. Note that from now on, we are in the same position of an analyst looking at the program for the first time (blackbox setting). Suppose that the analyst, after a reverse engineering phase, identified the relevant libpcre targets to be fuzzed, which are:

pcre_compile, that takes a string, compiles it to a regular expression object and returns it;pcre_exec, that runs the previously compiled regular expression on a string;free, that frees the compiled regular expression object.

This is how the body of LLVMFuzzerTestOneInput looks like:

All the srtf_* functions (safe revng translated functions) are the generated wrappers around the isolated ones, which are now directly callable from the C header.

Prior to extending the rev.ng capabilities with automated fuzzing, calling functions required to manually prepare arguments in the appropriate registers, as shown here below:

After lifting and linking it all, we ran the executable and made it crash a few seconds later, thus finding the bug:

Conclusions

We have seen that the main advantage of performing fuzzing with rev.ng is that one can easily write the harness function (LLVMFuzzerTestOneInput) in C/C++ by calling the recovered functions of the binary with low performance impact, even with the lack of source code - like in a whitebox scenario.

The next work consists of enhancing the underlying ABI-agnostic analysis to improve the runtime. Initially, this involves promoting a set of variables representing the CPU state, from global (current situation) to local variables, in order to reduce the memory traffic and allow for more intraprocedural optimization. On top of this, we are planning an ABI-specific layer to further refine the results of previous analyses.

Note also that, as of now, variadic functions are supported by manually passing them the number of arguments to be pushed onto the stack. This constraint can be relaxed in future work.

Finally, while we made our analyses as precise as possible, our approach still has some limitations that are inherent to static analysis. In practical tests though, under certain conditions, this results in marking some formal parameters of the original function as alive when they may be actually dead. So, while this is suboptimal, an analyst can easily mark the possible dead arguments as unused. Such cases will be minimized with the ABI-specific analysis.